2021

Compression in deep learning - an information theory perspective

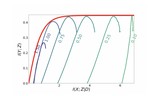

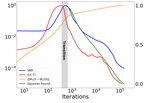

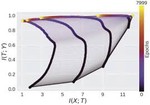

While DNNs have achieved many breakthroughs, our understanding of their internal structure, optimization process, and generalization is poor, and we often treat them as black boxes. We attempt to resolve these issues by suggesting that DNNs learn to optimize the Information Bottleneck (IB) principle - the tradeoff between information compression and prediction quality. In the first part of the talk, I presented this approach, showing an analytical and numerical study of DNNs in the information plane. This analysis reveals how the training process compresses the input to an optimal, efficient representation. I discussed recent works inspired by this analysis and showed how we can apply them to real-world problems. In the second part of the talk, I discussed information in infinitely-wide neural networks using recent results on Neural Tangent Kernel (NTK) networks. The NTK allows us to derive many tractable information-theoretic quantities. By utilizing these derivations, we can do an empirical search to find the important information-theoretic quantities that affect generalization in DNNs. I also presented the Dual Information Bottleneck (dualIB) framework, which finds an optimal representation that resolves some of the drawbacks of the original IB. A theoretical analysis of the dualIB shows the structure of its solution and its ability to preserve the original distribution’s statistics. Within this, we focused on the variational form of the dualIB, which allows its application to DNNs.
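To make the information plane concrete, here is a minimal sketch, not the code used in the talk, of how a single point (I(X;T), I(T;Y)) could be computed for a small discrete problem. It assumes a known joint distribution p(x, y) and a stochastic encoder p(t|x) with the Markov chain Y - X - T; the function names `mutual_information` and `information_plane_point` and the toy distributions are illustrative assumptions. The IB tradeoff is usually written as minimizing I(X;T) - beta * I(T;Y) over encoders, so the two coordinates below are the two terms being traded off.

```python
import numpy as np

def mutual_information(joint):
    """Mutual information (in bits) of a discrete joint distribution p(a, b)."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()             # normalize defensively
    pa = joint.sum(axis=1, keepdims=True)   # marginal p(a), shape (n, 1)
    pb = joint.sum(axis=0, keepdims=True)   # marginal p(b), shape (1, m)
    mask = joint > 0                        # treat 0 * log 0 as 0
    return float(np.sum(joint[mask] * np.log2(joint[mask] / (pa @ pb)[mask])))

def information_plane_point(p_xy, p_t_given_x):
    """Return (I(X;T), I(T;Y)) assuming T depends on Y only through X."""
    p_xy = np.asarray(p_xy, dtype=float)
    p_t_given_x = np.asarray(p_t_given_x, dtype=float)
    p_x = p_xy.sum(axis=1)                  # marginal p(x)
    p_xt = p_x[:, None] * p_t_given_x       # p(x, t) = p(x) p(t|x)
    p_ty = p_t_given_x.T @ p_xy             # p(t, y) = sum_x p(t|x) p(x, y)
    return mutual_information(p_xt), mutual_information(p_ty)

# Toy problem (hypothetical): four equally likely inputs, and a binary label
# that only depends on whether x is in {0, 1} or {2, 3}.
p_xy = np.array([[0.25, 0.0],
                 [0.25, 0.0],
                 [0.0, 0.25],
                 [0.0, 0.25]])

# Encoder that copies the input: no compression.
identity_point = information_plane_point(p_xy, np.eye(4))

# Encoder that merges inputs sharing a label: one bit of compression.
clustered = np.array([[1.0, 0.0],
                      [1.0, 0.0],
                      [0.0, 1.0],
                      [0.0, 1.0]])
clustered_point = information_plane_point(p_xy, clustered)

print(identity_point)    # I(X;T) = 2 bits, I(T;Y) = 1 bit
print(clustered_point)   # I(X;T) = 1 bit,  I(T;Y) = 1 bit
```

In this toy case the clustered encoder discards one bit about the input while keeping all the information about the label, which is the kind of movement in the information plane that the compression argument refers to. For real networks with continuous activations, these quantities have to be estimated rather than computed exactly.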

2019

Information in Infinite Ensembles of Infinitely-Wide Neural Networks

Finding generalization signals using information for infinitely-wide neural networks.

2018

Representation Compression in Deep Neural Network

An information-theoretic viewpoint on the optimization process of deep networks and their generalization abilities, using the information plane, and on how compression can help.

On the Information Theory of Deep Neural Networks

Understanding Deep Neural Networks with the information bottleneck principle.

2017

Open the Black Box of Deep Neural Networks

Where is the information in deep neural networks? Trying to find it by looking at the information plane.

0001

Analysis and Theory of Perceptual Learning in Auditory Cortex

Analysing perceptual learning of pure tones in the auditory cortex. Using a novel computational model, we show that overrepresentation of the learned tones does not necessarily improve along the training